Facebook is constantly changing, but one feature deserves a high five: its suicide prevention technology.

In 2015, Facebook partnered with organisations such as the National Suicide Prevention Lifeline and Now Matters Now to explore ways to prevent suicide. After research and collaboration with these groups, Facebook rolled out a feature that lets people ‘report’ a post suggesting possible self-harm or suicidal thoughts. Soon, the tech giant will roll out its robots to recognise when someone is considering taking their own life. This is a great example of how technology can literally save lives, but it raises an important question: should we really put human lives in the hands of bots?

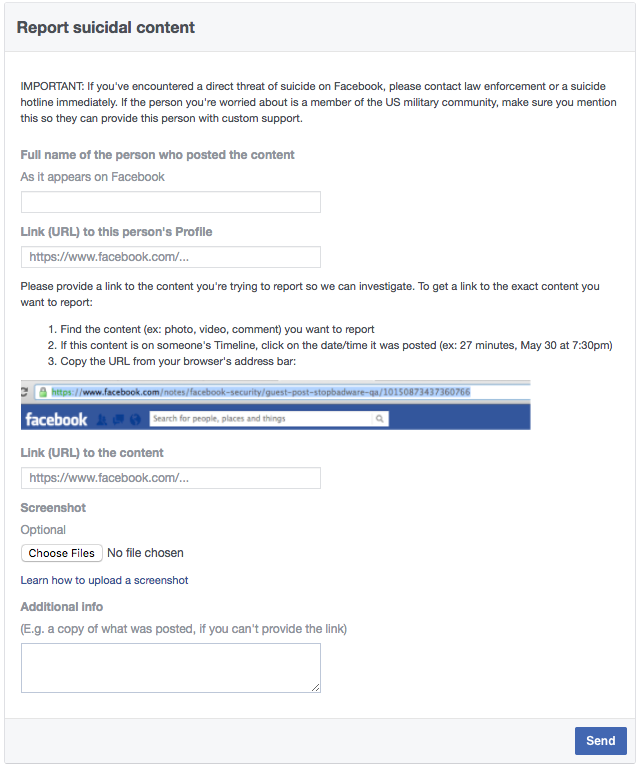

ABOVE: How to report suicidal thoughts and self-harm on Facebook

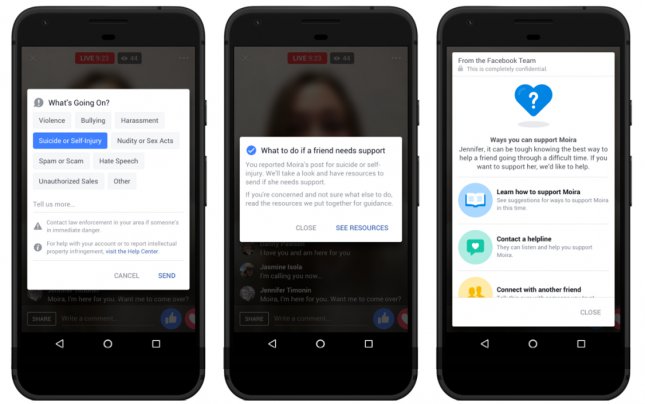

This year, Facebook have continued to develop their reporting features, allowing users to report live streams and content on Facebook Messenger. Previously, only posts could be reported. When Facebook first announced this development, many people asked why it would allow suicidal themes to be streamed at all, with some saying it would be best to ‘cut the live stream’. Facebook quickly responded:

“Some might say we should cut off the live stream, but what we’ve learned is cutting off the stream too early could remove the opportunity for that person to receive help,” - Jennifer Guadagno, Facebook Researcher

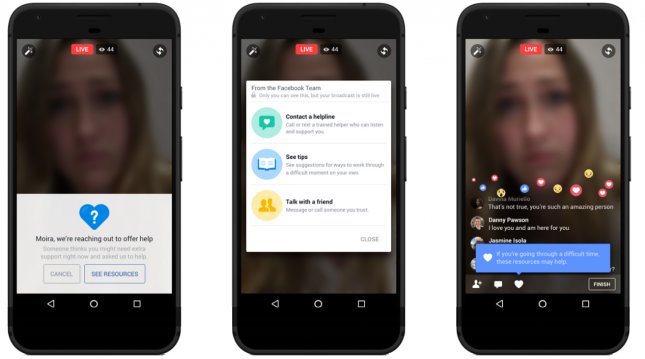

Obviously, if a live stream were displaying graphic content, Facebook would take it down as soon as possible. Still, it is comforting to know that they are thinking about the long-term benefits of their reporting features and, to some degree, allowing these vulnerable people to make their ‘call for help’. A viewer of the video can quickly alert Facebook, and the Facebook team then sends the person on the live stream a caring message saying that someone is worried about them. The team also messages the person who raised the alert, offering ways to support or help their Facebook friend.

In the US, Facebook are currently testing an update that uses AI and pattern-recognition algorithms. Put simply, Facebook are starting to automatically detect when someone is expressing suicidal thoughts on their site.

A flagged post is automatically sent to a Facebook ‘Community Operations Team’, which reviews it and, if they deem it necessary, contacts the person to offer support and signposting. This means spotting the warning signs no longer depends entirely on a friend noticing them. Facebook said it “might be possible in the future” to bring this sort of AI and pattern recognition to Facebook Live and Messenger, but the team said it is too early to say for sure.

What do other social media sites do?

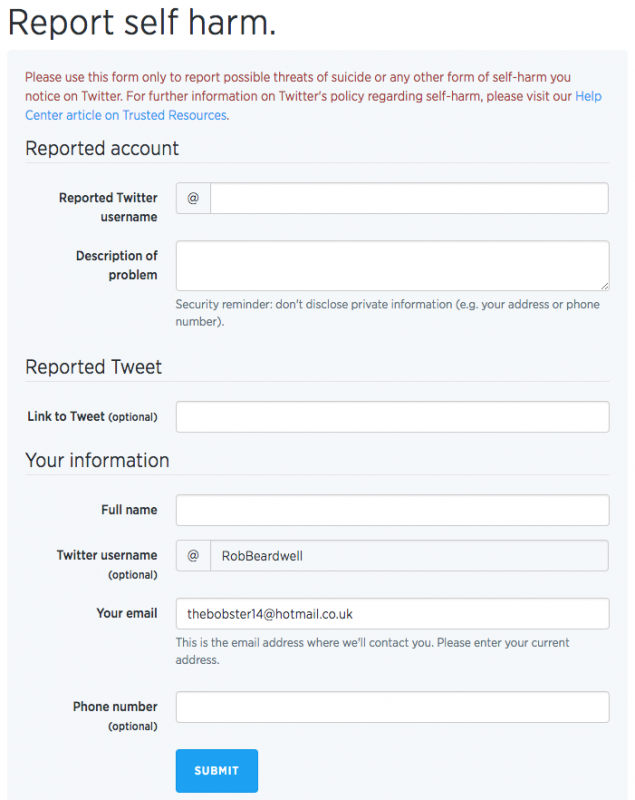

Similarly, Twitter allows users to flag content that implies self-harm. The tweet is then sent to a team “devoted to handling threats of self-harm or suicide”, which reaches out to the person at risk and offers support, guidance and encouragement to seek further help. Crucially, as with Facebook, the team lets that person know that someone has expressed concern for them, which is what prompted the team to act.

On Instagram, users can report an image for self-harm or suicidal content. Instagram will then take the content down and, although there isn’t much concrete evidence of it, its website says it will reach out to the person at risk and offer guidance, resources and support.

Snapchat does not offer a way for users to report suicidal content directly, but you can fill out a safety concern form on its website. The person at risk is then given contact details for suicide hotlines, along with words of encouragement to seek further help.

Instagram and Twitter are following closely in Facebook’s blue footsteps with quick and simple reporting features. However, they don’t match Facebook’s upcoming automatic detection technology, which takes much of the responsibility out of users’ hands and reduces the risk of warning signs being missed.

What we need to ensure with the rollout of this great technology is that machines do not replace our duty as human beings to spot the signs of declining mental health in our friends and family. We can care. Robots can't. However, in this fast-paced, busy world, where it is easy to get lost in the sea of social media posts, I think this is a great example of how humans and machines can work together for the greater good.

If you agree, then here is a quick list of what to look out for on social media (so we don't leave it all to the machines).

High-risk warning signs

These are the more obvious signs of suicidal tendencies and largely a matter of common sense.

A person may be at high risk of attempting suicide if they:

- Threaten to hurt or kill themselves

- Talk or write about death, dying or suicide

- Actively look for ways to kill themselves

Other warning signs

These warning signs are sometimes difficult to spot, especially if you don’t know you should be looking out for them.

A person may be at risk of attempting suicide if they:

- Complain of feelings of hopelessness

- Have episodes of sudden rage and anger

- Act recklessly and engage in risky activities with an apparent lack of concern about the consequences

- Talk about feeling trapped, such as saying they can’t see any way out of their current situation

- Self-harm, including misusing drugs or alcohol, or using more than they usually do

- Noticeably gain or lose weight due to a change in their appetite

- Become increasingly withdrawn from friends, family and society in general

- Appear anxious and agitated

- Are unable to sleep, or sleep all the time

- Have sudden mood swings; a sudden lift in mood after a period of depression could indicate they have decided to attempt suicide

- Talk and act in a way that suggests their life has no sense of purpose

- Lose interest in most things, including their appearance

- Put their affairs in order, such as sorting out possessions or making a will